Here in Belgium people have been receiving an Electronic Identity Card (EID) for years now. Every once in a while a customer asks me whether this card can be used to log on to workstations, which would give them a form of strong authentication. This post describes the steps needed to make that possible. It was written using a Belgian EID and the Windows Technical Preview (Threshold) for both client and server.

In my lab I kept the infrastructure to a bare minimum.

The Domain Controller(s) Configuration

Domain Controller Certificate:

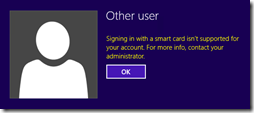

You might wonder why I included a certificate authority in this demo. Users will log on using their EID, and those cards come with certificates that have nothing to do with your internal PKI. However, for domain controllers to be able to authenticate users with a smart card, they need a valid certificate of their own. If this requirement is not met, your users will receive an error:

In words: Signing in with a smart card isn’t supported for your account. For more info, contact your administrator.

And your domain controllers will log these errors:

In words: The Key Distribution Center (KDC) cannot find a suitable certificate to use for smart card logons, or the KDC certificate could not be verified. Smart card logon may not function correctly if this problem is not resolved. To correct this problem, either verify the existing KDC certificate using certutil.exe or enroll for a new KDC certificate.

And

In words: This event indicates an attempt was made to use smartcard logon, but the KDC is unable to use the PKINIT protocol because it is missing a suitable certificate.

To give the domain controller a certificate that can be used to authenticate users with a smart card, we will use the Active Directory Certificate Services (AD CS) role on the WS10-CA2 server. This server is installed as an enterprise CA using more or less default values. Once the AD CS role is installed, your domain controller should automatically request a certificate based upon the “Domain Controller” certificate template. This is a V1 template, and a domain controller is more or less hardcoded to request a certificate based upon it.

In my lab this certificate was good enough to let my user authenticate using his EID. After restarting the KDC service and performing the first authentication, the following event was logged though:

In words: The Key Distribution Center (KDC) uses a certificate without KDC Extended Key Usage (EKU) which can result in authentication failures for device certificate logon and smart card logon from non-domain-joined devices. Enrollment of a KDC certificate with KDC EKU (Kerberos Authentication template) is required to remove this warning.

Besides the Domain Controller template there are also the more recent Domain Controller Authentication and Kerberos Authentication templates, which depend on auto-enrollment being configured:

Computer Configuration > Policies > Windows Settings > Security Settings > Public Key Policies

After waiting a bit (gpupdate and/or certutil -pulse might speed things up), we got our new certificates:

You can see that the original domain controller certificate is gone and has been replaced by its more recent counterparts. After testing we can confirm that the warning is no longer logged in the event log. That covers the certificate the domain controller requires, but we’ll need to add a few more settings on the domain controllers for EID logons to work.

Domain Controller Settings

Below HKLM\SYSTEM\CurrentControlSet\Services\Kdc we’ll create two registry keys:

Strictly speaking, the last one shouldn’t be necessary if your domain controller can reach the internet, or at least the URLs where the CRLs referenced by the EIDs are hosted. If you use this registry key, revocation checking is relaxed, so make sure to remove the name mapping (more on that later) or disable the user when an EID is stolen or lost. An easy way to push these registry keys is using group policy preferences.

Domain Controller Trusted Certificate Authorities

In order for the domain controller to accept the EID of the user, the domain controller has to trust the full path in the issued certificate. Here’s my EID as an example:

We’ll add the Belgium Root CA2 certificate to the Trusted Root Certification Authorities on the domain controller:

Computer Configuration > Policies > Windows Settings > Security Settings > Public Key Policies > Trusted Root Certification Authorities

And the Citizen CA to the Intermediate Certification Authorities on the domain controller:

Computer Configuration > Policies > Windows Settings > Security Settings > Public Key Policies > Intermediate Certification Authorities

Now this is where the first drawback of using EIDs as smart cards comes in: there are many Citizen CAs to add and trust. Roughly every month, sometimes more often, sometimes less, a new Citizen CA is issued and used to sign new EID certificates. You can find them all here: http://certs.eid.belgium.be/. So instead of using a GPO to distribute them, scripting a regular download and adding them to the local certificate stores might be a better approach.
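Such a scheduled script could look roughly like the sketch below. It is only a starting point and makes a few assumptions that I have not verified: that the page at http://certs.eid.belgium.be/ is a plain HTML index with links to .crt files, that the Citizen CA files have "citizen" in their file name, and that the script runs elevated on the domain controller. The function names are made up for this example; certutil -addstore with the store name CA targets the local Intermediate Certification Authorities store.

```python
import subprocess
import urllib.request
from html.parser import HTMLParser
from pathlib import Path
from urllib.parse import urljoin

INDEX_URL = "http://certs.eid.belgium.be/"  # index page mentioned above


class _LinkCollector(HTMLParser):
    """Collects the href of every <a> tag in an HTML page."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.hrefs.append(value)


def citizen_ca_links(index_html, base_url=INDEX_URL):
    """Return absolute URLs of links that look like Citizen CA certificates.

    Assumption: the file names contain 'citizen' and end in '.crt'.
    """
    parser = _LinkCollector()
    parser.feed(index_html)
    links = set()
    for href in parser.hrefs:
        name = href.rsplit("/", 1)[-1].lower()
        if "citizen" in name and name.endswith(".crt"):
            links.add(urljoin(base_url, href))
    return sorted(links)


def addstore_command(cert_path):
    """Build the certutil call that adds a file to the local intermediate CA store."""
    return ["certutil", "-addstore", "CA", str(cert_path)]


def sync_citizen_cas(target_dir):
    """Download Citizen CA certificates not yet present in target_dir and import them."""
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)
    with urllib.request.urlopen(INDEX_URL) as response:
        index_html = response.read().decode("utf-8", errors="replace")
    for url in citizen_ca_links(index_html):
        destination = target / url.rsplit("/", 1)[-1]
        if destination.exists():
            continue  # imported during an earlier run
        urllib.request.urlretrieve(url, destination)
        try:
            subprocess.run(addstore_command(destination), check=True)
        except subprocess.CalledProcessError:
            destination.unlink()  # retry this file on the next run
            raise
```

Calling sync_citizen_cas with a local folder (for example from a scheduled task) would then only import the certificates that appeared since the previous run. The root CA is deliberately left out of the script: it changes rarely and is better added by hand or through the GPO.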

The Client Configuration

Settings

For starters we’ll configure the following registry keys:

Below HKLM\SYSTEM\CurrentControlSet\Control\Lsa\Kerberos\Parameters we’ll create two registry keys:

Again, if your client is capable of reaching the internet you should not need these. I have to admit that I’m not entirely sure how the client will react when a forward proxy is in use. After all, the SYSTEM account doesn’t always know what proxy to use, and the proxy might require authentication.

Besides the registry keys, there are also some regular group policy settings to configure. In some articles you’ll probably see these settings being pushed out as registry keys as well, but I prefer to use the “proper” settings as they are available anyhow.

Computer Configuration > Policies > Administrative Templates > Windows Components > Smart Cards

These two are required so that the EID certificate can be used. As you can see, it has a usage attribute of Digital Signature.

In some other guides you might also find these Smart Card settings enabled:

- Force the reading of all certificates on the smart card
- Turn on certificate propagation from smart card
- Turn on root certificate propagation from smart card

But my tests worked fine without these.

Drivers

Out of the box Windows will not be able to use your EID. If you don’t install the required drivers you’ll get an error like this:

You can download the drivers from here: eid.belgium.be. On the Windows 10 preview I got an error during the installation, but that probably had to do with the EID viewer software. The drivers seem to function just fine.

Active Directory User Configuration

As these certificates are issued by the government, they don’t contain any specific information that allows Active Directory to find out which user should be authenticated. To resolve that we can add a name mapping to a user. And this is the second drawback: if you want to put EID authentication in place you’ll need some sort of process or tool that allows users to link their EID to their Active Directory user account. The helpdesk could do this for them, or you could write a custom tool that allows users to do it themselves.

In order to do it manually:

First we need the certificate from the EID. You can use Internet Explorer > Internet Options > Content > Certificates

You should see two certificates. The one you want is the one with Authentication in the Issued To. Use the Export… button to save it to a file.

Open Active Directory Users and Computers > View > Advanced Features

Locate the user the EID belongs to > Right-Click > Name Mappings…

Add an X.509 Certificate

Browse to the exported copy of the Authentication certificate from the EID

Click OK

Testing the authentication

You should now be able to log on to a workstation with the given EID. Either click Other user and then the smart card icon:

Or, if the client has remembered you from earlier logons, choose the smart card option below that entry.

An easy way to see whether a user logged on using a smart card or username/password is to query the user’s group memberships on the client. When users log on with a smart card they get the This Organization Certificate group SID added to their logon token. This is a well-known group (S-1-5-65-1) that was introduced with Windows 7 / Windows Server 2008 R2.
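If you want to automate that check, a small sketch like the one below could do it. It assumes the output format of whoami /groups /fo csv /nh (group name, type, SID, attributes) and only looks at the SID column, so it should not depend on the display language. The function names are made up for this example.

```python
import csv
import io
import subprocess

SMART_CARD_LOGON_SID = "S-1-5-65-1"  # "This Organization Certificate"


def group_sids(whoami_csv):
    """Extract the SID column from `whoami /groups /fo csv /nh` output."""
    sids = []
    for row in csv.reader(io.StringIO(whoami_csv)):
        # Expected columns: group name, type, SID, attributes
        if len(row) >= 3:
            sids.append(row[2].strip())
    return sids


def logged_on_with_smart_card(whoami_csv):
    """True if the smart card logon SID is present in the whoami output."""
    return SMART_CARD_LOGON_SID in group_sids(whoami_csv)


def current_session_used_smart_card():
    """Run whoami for the current logon session (Windows only)."""
    result = subprocess.run(
        ["whoami", "/groups", "/fo", "csv", "/nh"],
        capture_output=True, text=True, check=True,
    )
    return logged_on_with_smart_card(result.stdout)
```

On a client, current_session_used_smart_card() would return True after an EID logon and False after a username/password logon.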

Forcing smart card authentication

Now all of the above allows a user to authenticate using a smart card, but it doesn’t force the user to do so. Username/password will still be accepted by the workstations. If you want to force smart card logon there are two possibilities, each with its own drawbacks.

1. On the user level:

There’s a property, Smart card is required for interactive logon, that you can check on the user object in Active Directory. Once this is checked, the user will only be able to log on using a smart card. There’s one major drawback though: as soon as you click Apply, the password of that user is set to a random value and password policies no longer apply to that user. That means that if you have applications that are integrated with Active Directory but ask for credentials in a username/password form, your user will not be able to log on, as they don’t know the password. If you configure this setting on the user, you have to make sure all applications are available through Kerberos/NTLM SSO. If you were to use Exchange ActiveSync, for instance, you would have to change the authentication scheme from username/password to certificate-based. So I’m not really sure enforcing this at the user level is a real option. It seems more feasible for protecting high-privilege accounts.

2. On the workstation level:

There’s a group policy setting that can be configured on the computer level that requires a smart card for all interactive logons. It can be found under Computer Configuration > Policies > Windows Settings > Security Settings > Local Policies > Security Options > Interactive logon: Require smart card

While you’re there, also look at Interactive logon: Smart card removal behavior. It allows you to configure a workstation to lock when a smart card is removed. If you configure this one, make sure to also configure the Smart Card Removal Policy service to be started on your clients. This service is stopped and set to manual by default.

Now the bad news. Just like with the first one, there’s also a drawback. This one could be less critical for some organisations, but it might require people to operate in a slightly different way. Once this setting is enabled, all interactive logons require a smart card:

- Ctrl-alt-del logon like a regular user
- Remote Desktop to this client
- Right-click run as administrator (in case the user is not an administrator himself) / run as different user

For instance, right-clicking Notepad and choosing run as different user will result in the following error if you try to provide a username/password:

In words: Account restrictions are preventing this user from signing in. For example: blank passwords aren’t allowed, sign-in times are limited, or a policy restriction has been enforced.

For us this is an issue as our helpdesk often uses remote assistance (built into the OS) to help users. From time to time they have to provide their administrative account in order to perform certain actions. As the user’s smart card is inserted, the helpdesk admin cannot insert his own EID. That would require an additional smart card reader. And besides that, a lot of the helpdesk tasks are done remotely, which means the EID is in the wrong client. There seem to be third-party solutions that tackle this particular issue by redirecting smart cards to the PC you’re offering remote assistance to.

Now there’s a possible workaround for this. The policy we configure in fact sets the following registry value to 1:

MACHINE\Software\Microsoft\Windows\CurrentVersion\Policies\System\ScForceOption

Using remote registry you could change it to 0 and then perform your run as different user again. Changing it to 0 sets Interactive logon: Require smart card to disabled, effective immediately. Obviously this isn’t quite elegant, but you could create a small script/utility for it…
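A minimal sketch of such a utility is shown below. It assumes that the MACHINE prefix in the policy path corresponds to the HKLM hive, that the Remote Registry service is reachable on the target, and that you run it with an account that has administrative rights there. It simply wraps reg.exe; the function names and the computer name in the usage note are made up for this example.

```python
import subprocess

# Assumption: "MACHINE\..." in the policy path above maps to HKLM.
POLICY_KEY = r"HKLM\Software\Microsoft\Windows\CurrentVersion\Policies\System"


def scforceoption_command(computer, enabled):
    """Build a reg.exe call that sets ScForceOption on a remote computer."""
    remote_key = "\\\\" + computer + "\\" + POLICY_KEY
    return [
        "reg", "add", remote_key,
        "/v", "ScForceOption",
        "/t", "REG_DWORD",
        "/d", "1" if enabled else "0",
        "/f",  # overwrite the existing value without prompting
    ]


def set_require_smart_card(computer, enabled):
    """Switch the 'require smart card' behaviour on or off on a remote client."""
    subprocess.run(scforceoption_command(computer, enabled), check=True)
```

A helpdesk admin could then call set_require_smart_card("PC01", False), perform the run as different user, and call it again with True to restore the original behaviour.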

Administrative Accounts (or how to link a smart card to two users)

If you use the same certificate (EID) in the name mapping of two users in Active Directory, your user will fail to log in:

In words: Your credentials could not be verified. The reason is quite simple. Your workstation is presenting a certificate to Active Directory, but Active Directory has two principals (users) that map to that certificate. Now which user does the workstation want?

Name hints to the rescue! Let’s add the following GPO setting to our clients:

Computer Configuration > Policies > Administrative Templates > Windows Components > Smart Cards

After enabling this setting there’s an optional field called Username hint below the prompt for the PIN.

In this username hint field the person trying to log on using a smart card can specify which account name should be used. In the following example I’ll be logging on with my thomas_admin account:
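Conceptually, the hint just narrows the list of accounts that map to the presented certificate down to one. The toy model below illustrates that idea. It is not how Active Directory implements the lookup, and the thomas account and the certificate identifier are made up for the illustration.

```python
def resolve_principal(certificate_id, name_mappings, username_hint=None):
    """Toy model: pick the account a certificate should log on as.

    name_mappings maps an account name to the set of certificate ids
    that have been added to that account as a name mapping.
    """
    candidates = sorted(
        account for account, certs in name_mappings.items()
        if certificate_id in certs
    )
    if username_hint is not None:
        # The hint keeps only the account the user asked for.
        candidates = [a for a in candidates if a.lower() == username_hint.lower()]
    if len(candidates) != 1:
        raise LookupError(f"cannot pick a single account from {candidates}")
    return candidates[0]


# Hypothetical data: one EID certificate mapped to two accounts.
mappings = {
    "thomas": {"eid-cert"},
    "thomas_admin": {"eid-cert"},
}
print(resolve_principal("eid-cert", mappings, username_hint="thomas_admin"))
```

Without the hint the same call raises a LookupError, which mirrors the failed logon described above.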

NTAuth Certificate Store

Whenever you read up on smart card logon you’ll see the NTAuth certificate store being mentioned from time to time. It seems to be involved in some way, but exactly how is still not clear to me. All I can say is that in my setup, using an AD integrated CA for the Domain Controller certificates, I did not have to add any certificates to the NTAuth store: not the Belgian Root CA, not the Citizen CA. My internal CA was in it of course.

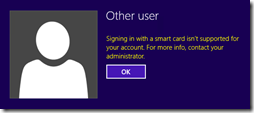

I did some tests, and in my experience the CA that issued your domain controller’s certificate has to be in the NTAuth store on both clients and domain controllers. If you remove that certificate you’ll be greeted with an error like this:

In words: Signing in with a smart card isn’t supported for your account. For more info, contact your administrator. And on the domain controller the same errors are logged as the ones from the beginning of this article.

Some useful commands to manipulate the NTAuth store locally on a client/server:

- Add a certificate manually: certutil -enterprise -addstore ntAuth .\ThresholdCA.cer
- View the store: certutil -enterprise -viewstore ntAuth
- Delete a certificate: certutil -enterprise -viewdelstore ntAuth

Keep in mind that the NTAuth store exists both locally on the clients/servers and in Active Directory. An easy way to view/manipulate the NTAuth store in Active Directory is the pkiview.msc management console, which you typically find on a CA. Right-click the root and choose Manage AD Containers to view the store.

A second important fact regarding the NTAuth store: whilst you might see the required CA certificate in the store in AD, your clients and servers will only download the content of the AD NTAuth store if they have auto-enrollment configured!

Summary:

There are definitely some drawbacks to using EID in a corporate environment:

- No management software to link the certificates to the AD users. Yes, there’s Active Directory Users and Computers, but you’ll have to ask the users to either come visit your helpdesk or email their certificate. Depending on the number of users in your organisation this might be a hell of a task. A custom tool might be a way to solve this.
- Regular maintenance: as described, a new Citizen CA (Subordinate Certificate Authority) is issued quite regularly. You need to ensure your domain controllers have this CA in their trusted intermediate authorities store. This can be done through GPO, but this particular setting seems hard to automate. You might be better off with a script that performs this task directly on your domain controllers.
- Helpdesk users will have to face the added complexity if the require smart card setting is enabled.
- If an EID is stolen or lost you might have to temporarily allow normal logons for that user. An alternative is to have a batch of smart cards that you can issue yourself. An example vendor for such smart cards is Gemalto.
- Another point that I didn’t have the chance to test: what about the users’ passwords? If they can’t use them to log on, but the regular password policies still apply, how will they be notified of the expiration? Or even better, how will they change them? Some applications might depend on the username/password to log on.

As always, feedback is welcome!
